Sunday, April 30, 2017

Hybrid computer

Hybrid computers are computers that exhibit features of analog computers and digital computers. The digital component normally serves as the controller and provides logical and numerical operations, while the analog component often serves as a solver of differential equations and other mathematically complex equations. The first desktop hybrid computing system was the Hycomp 250, released by Packard Bell in 1961. Another early example was the HYDAC 2400, an integrated hybrid computer released by EAI in 1963. Late in the 20th century, hybrids dwindled with the increasing capabilities of digital computers including digital signal processors.

In general, analog computers are extraordinarily fast, since they are able to solve most mathematically complex equations at the rate at which a signal traverses the circuit, which is generally an appreciable fraction of the speed of light. On the other hand, the precision of analog computers is not good; they are limited to three, or at most, four digits of precision.

Digital computers can be built to take the solution of equations to almost unlimited precision, but quite slowly compared to analog computers. Generally, complex mathematical equations are approximated using iterative methods which take huge numbers of iterations, depending on how good the initial "guess" at the final value is and how much precision is desired. (This initial guess is known as the numerical "seed".) For many real-time operations in the 20th century, such digital calculations were too slow to be of much use (e.g., for very high frequency phased array radars or for weather calculations), but the precision of an analog computer is insufficient.

Hybrid computers can be used to obtain a very good but relatively imprecise 'seed' value, using an analog computer front-end, which is then fed into a digital computer iterative process to achieve the final desired degree of precision. With a three- or four-digit, highly accurate numerical seed, the total digital computation time to reach the desired precision is dramatically reduced, since many fewer iterations are required. One of the main technical problems to be overcome in hybrid computers is minimizing digital-computer noise in analog computing elements and grounding systems.
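The effect of a good seed on iteration count can be sketched in a few lines of Python. This is only an illustration, not a model of any real hybrid machine: the function name `newton_sqrt`, the choice of Newton's method for a square root, and the seed value 1.414 (standing in for a four-digit analog result) are all assumptions made for the example.

```python
def newton_sqrt(value, seed, tolerance=1e-12):
    """Return (root, iterations) for sqrt(value) using Newton's method."""
    estimate = seed
    iterations = 0
    # Stop once the squared estimate matches the value to the relative tolerance.
    while abs(estimate * estimate - value) > tolerance * value:
        estimate = 0.5 * (estimate + value / estimate)
        iterations += 1
    return estimate, iterations


target = 2.0
# A poor initial guess, as a purely digital solver might start with.
coarse_root, coarse_steps = newton_sqrt(target, seed=1000.0)
# A four-digit seed, standing in for the output of an analog front-end.
seeded_root, seeded_steps = newton_sqrt(target, seed=1.414)

print("coarse seed:", coarse_steps, "iterations")
print("analog-style seed:", seeded_steps, "iterations")
```

Both runs converge to the same root, but the run that starts from the four-digit seed needs far fewer refinement steps.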

Consider that the nervous system in animals is a form of hybrid computer. Signals pass across the synapses from one nerve cell to the next as discrete (digital) packets of chemicals, which are then summed within the nerve cell in an analog fashion by building an electro-chemical potential until its threshold is reached, whereupon it discharges and sends out a series of digital packets to the next nerve cell. The advantages are at least threefold: noise within the system is minimized (and tends not to be additive), no common grounding system is required, and there is minimal degradation of the signal even if there are substantial differences in activity of the cells along a path (only the signal delays tend to vary). The individual nerve cells are analogous to analog computers; the synapses are analogous to digital computers.

Analog computer

An analog computer is a form of computer that uses the continuously changeable aspects of physical phenomena such as electrical, mechanical, or hydraulic quantities to model the problem being solved. In contrast, digital computers represent varying quantities symbolically, as their numerical values change. As an analog computer does not use discrete values, but rather continuous values, processes cannot be reliably repeated with exact equivalence, as they can with Turing machines. Unlike digital signal processing, analog computers do not suffer from quantization noise, but are limited by analog noise.

Analog computers were widely used in scientific and industrial applications where digital computers of the time lacked sufficient performance. Analog computers can have a very wide range of complexity. Slide rules and nomographs are the simplest, while naval gunfire control computers and large hybrid digital/analog computers were among the most complicated. Systems for process control and protective relays used analog computation to perform control and protective functions.

The advent of digital computing made simple analog computers obsolete as early as the 1950s and 1960s, although analog computers remained in use in some specific applications, like the flight computer in aircraft, and for teaching control systems in universities. More complex applications, such as synthetic aperture radar, remained the domain of analog computing well into the 1980s, since digital computers were insufficient for the task.

Networking and the Internet

Computers have been used to coordinate information between multiple locations since the 1950s. The U.S. military's SAGE system was the first large-scale example of such a system, which led to a number of special-purpose commercial systems such as Sabre. In the 1970s, computer engineers at research institutions throughout the United States began to link their computers together using telecommunications technology. The effort was funded by ARPA (now DARPA), and the computer network that resulted was called the ARPANET. The technologies that made the ARPANET possible spread and evolved.

In time, the network spread beyond academic and military institutions and became known as the Internet. The emergence of networking involved a redefinition of the nature and boundaries of the computer. Computer operating systems and applications were modified to include the ability to define and access the resources of other computers on the network, such as peripheral devices, stored information, and the like, as extensions of the resources of an individual computer. Initially these facilities were available primarily to people working in high-tech environments, but in the 1990s the spread of applications like e-mail and the World Wide Web, combined with the development of cheap, fast networking technologies like Ethernet and ADSL saw computer networking become almost ubiquitous. In fact, the number of computers that are networked is growing phenomenally. A very large proportion of personal computers regularly connect to the Internet to communicate and receive information. "Wireless" networking, often utilizing mobile phone networks, has meant networking is becoming increasingly ubiquitous even in mobile computing environments.

Block diagram of computer

Figure:- BLOCK DIAGRAM OF A COMPUTER

A computer contains five basic units:

1) Memory Unit

2) Arithmetic Logic Unit (ALU)

3) Input Unit

4) Output Unit

5) Control Unit (CU)

- CENTRAL PROCESSING UNIT (CPU):

- CPU is the brain of a computer.

- It transforms raw data into useful information.

- CPU is responsible for all processing.

- It has two parts: the Arithmetic Logic Unit (ALU) and the Control Unit (CU).

2. Arithmetic Logic Unit (ALU):

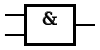

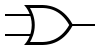

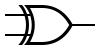

- It is the part where actual processing takes place.

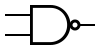

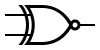

- It can perform Addition, Subtraction, Multiplication, Division, Square Roots (etc) and logic operations such as AND, OR (etc) on binary numbers.

3. Control Unit (CU):

- It tells the computer what specific sequence of operations it must perform.

- It also specifies timing of the instructions.

- Its function is to Fetch, Decode and Execute instructions that are stored in memory.

- It controls:

  - Memory devices

  - Arithmetic Logic Unit

  - All input/output devices
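The fetch, decode and execute cycle described above can be sketched as a toy one-register machine in Python. Everything here is hypothetical and chosen for illustration: the opcodes `LOAD`, `ADD`, `AND` and `HALT`, the `run` function, and the representation of a program as a list of pairs do not correspond to any real instruction set.

```python
def run(program):
    """Run a list of (opcode, operand) pairs on a one-register toy machine."""
    accumulator = 0
    program_counter = 0
    while program_counter < len(program):
        # Fetch: read the instruction the program counter points at.
        opcode, operand = program[program_counter]
        program_counter += 1
        # Decode and execute: the control unit selects the operation,
        # and the arithmetic or logic work is what an ALU would perform.
        if opcode == "LOAD":
            accumulator = operand
        elif opcode == "ADD":
            accumulator += operand
        elif opcode == "AND":
            accumulator &= operand
        elif opcode == "HALT":
            break
        else:
            raise ValueError("unknown opcode: " + opcode)
    return accumulator


result = run([
    ("LOAD", 0b1100),
    ("ADD", 0b0011),
    ("AND", 0b1010),
    ("HALT", 0),
])
print(bin(result))
```

The loop plays the role of the control unit, while the `ADD` and `AND` branches stand in for arithmetic and logic operations on binary numbers.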

5. Main Memory:

- Also called Primary memory or Internal memory.

- Memory is used to store data temporarily or permanently.

- Data stored in memory can be used for required task.

- RAM and ROM are most commonly used as main memory

Hardware

The term hardware covers all of those parts of a computer that are tangible physical objects. Circuits, computer chips, graphic cards, sound cards, memory (RAM), motherboard, displays, power supplies, cables, keyboards, printers and "mice" input devices are all hardware.

History of computing hardware

| Generation | Category | Examples |
|---|---|---|
| First generation (mechanical/electromechanical) | Calculators | Pascal's calculator, Arithmometer, Difference engine, Quevedo's analytical machines |
| | Programmable devices | Jacquard loom, Analytical engine, IBM ASCC/Harvard Mark I, Harvard Mark II, IBM SSEC, Z1, Z2, Z3 |
| Second generation (vacuum tubes) | Calculators | Atanasoff–Berry Computer, IBM 604, UNIVAC 60, UNIVAC 120 |
| | Programmable devices | Colossus, ENIAC, Manchester Small-Scale Experimental Machine, EDSAC, Manchester Mark 1, Ferranti Pegasus, Ferranti Mercury, CSIRAC, EDVAC, UNIVAC I, IBM 701, IBM 702, IBM 650, Z22 |
| Third generation (discrete transistors and SSI, MSI, LSI integrated circuits) | Mainframes | IBM 7090, IBM 7080, IBM System/360, BUNCH |
| | Minicomputer | HP 2116A, IBM System/32, IBM System/36, LINC, PDP-8, PDP-11 |
| Fourth generation (VLSI integrated circuits) | Minicomputer | VAX, IBM System i |
| | 4-bit microcomputer | Intel 4004, Intel 4040 |
| | 8-bit microcomputer | Intel 8008, Intel 8080, Motorola 6800, Motorola 6809, MOS Technology 6502, Zilog Z80 |
| | 16-bit microcomputer | Intel 8088, Zilog Z8000, WDC 65816/65802 |
| | 32-bit microcomputer | Intel 80386, Pentium, Motorola 68000, ARM |
| | 64-bit microcomputer | Alpha, MIPS, PA-RISC, PowerPC, SPARC, x86-64, ARMv8-A |
| | Embedded computer | Intel 8048, Intel 8051 |
| | Personal computer | Desktop computer, Home computer, Laptop computer, Personal digital assistant (PDA), Portable computer, Tablet PC, Wearable computer |

Friday, April 28, 2017

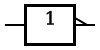

Logic gate

A logic gate is an idealized or physical device implementing a Boolean function; that is, it performs a logical operation on one or more binary inputs, and produces a single binary output.

Web server

A web server is a computer system that processes requests via HTTP, the basic network protocol used to distribute information on the World Wide Web. The term can refer to the entire system, or specifically to the software that accepts and supervises the HTTP requests.

Wednesday, April 26, 2017

Programming language

A programming language is a formal computer language designed to communicate instructions to a machine, particularly a computer. Programming languages can be used to create programs to control the behavior of a machine or to express algorithms.

Syntax

A programming language's surface form is known as its syntax. Most programming languages are purely textual; they use sequences of text including words, numbers, and punctuation, much like written natural languages. On the other hand, there are some programming languages which are more graphical in nature, using visual relationships between symbols to specify a program.

The syntax of a language describes the possible combinations of symbols that form a syntactically correct program. The meaning given to a combination of symbols is handled by semantics (either formal or hard-coded in a reference implementation). Since most languages are textual, this article discusses textual syntax.

Programming language syntax is usually defined using a combination of regular expressions (for lexical structure) and Backus–Naur form (for grammatical structure). Below is a simple grammar, based on Lisp:

    expression ::= atom | list
    atom ::= number | symbol
    number ::= [+-]?['0'-'9']+
    symbol ::= ['A'-'Z''a'-'z'].*
    list ::= '(' expression* ')'
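A grammar like this maps quite directly onto a recursive-descent parser. The following Python sketch is one possible reading of it, with some simplifying assumptions: tokens are separated by whitespace or parentheses (so a symbol cannot itself contain a parenthesis, although the `.*` in the symbol rule would technically allow it), and unbalanced input is not handled gracefully. The names `tokenize` and `parse` are made up for the example.

```python
import re

# A token is either a single parenthesis or a run of non-space, non-paren characters.
TOKEN = re.compile(r"\s*([()]|[^\s()]+)")


def tokenize(text):
    """Split source text into parentheses and atoms."""
    return TOKEN.findall(text)


def parse(tokens):
    """Parse one expression, consuming tokens from the front of the list."""
    token = tokens.pop(0)
    if token == "(":
        # list ::= '(' expression* ')'
        items = []
        while tokens[0] != ")":
            items.append(parse(tokens))
        tokens.pop(0)  # discard the closing ')'
        return items
    if re.fullmatch(r"[+-]?[0-9]+", token):
        # number ::= [+-]?['0'-'9']+
        return int(token)
    if re.fullmatch(r"[A-Za-z].*", token):
        # symbol ::= ['A'-'Z''a'-'z'].*
        return token
    raise SyntaxError("unexpected token: " + token)


tree = parse(tokenize("(add 12 (neg -3))"))
print(tree)
```

Lists become Python lists, numbers become integers and symbols stay as strings, so the nesting of the result mirrors the nesting of the source text.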

Logic programming

A program is a set of premises, and computation is performed by attempting to prove candidate theorems. From this point of view, logic programs are declarative, focusing on what the problem is, rather than on how to solve it.

However, the backward reasoning technique, implemented by SLD resolution, used to solve problems in logic programming languages such as Prolog, treats programs as goal-reduction procedures. Thus clauses of the form:

- H :- B1, …, Bn.

have a dual interpretation, both as procedures

- to show/solve H, show/solve B1 and … and Bn

and as logical implications:

- B1 and … and Bn implies H.

Experienced logic programmers use the procedural interpretation to write programs that are effective and efficient, and they use the declarative interpretation to help ensure that programs are correct.
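The procedural reading of a clause ("to show H, show B1 and ... and Bn") can be sketched as a tiny backward-chaining solver in Python. This is a heavily simplified illustration, not SLD resolution as Prolog implements it: the clauses are propositional (no variables or unification), there is no loop detection, and the rule names and the `solve` function are hypothetical.

```python
# Each head maps to a list of alternative bodies; a fact has an empty body.
RULES = {
    "mortal": [["human"]],                 # mortal :- human.
    "human": [["greek"], ["roman"]],       # human :- greek.   human :- roman.
    "greek": [[]],                         # greek.
}


def solve(goal, rules):
    """To show the goal, find a clause for it whose body goals can all be shown."""
    return any(
        all(solve(subgoal, rules) for subgoal in body)
        for body in rules.get(goal, [])
    )


print(solve("mortal", RULES))
print(solve("roman", RULES))
```

Read declaratively, the same table says that being Greek implies being human, which implies being mortal; the solver simply runs those implications backwards from the goal.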

Functional programming

The principles of modularity and code reuse in practical functional languages are fundamentally the same as in procedural languages, since they both stem from structured programming. So for example:

- Procedures correspond to functions. Both allow the reuse of the same code in various parts of the programs, and at various points of its execution.

- By the same token, procedure calls correspond to function application.

- Functions and their invocations are modularly separated from each other in the same manner, by the use of function arguments, return values and variable scopes.

The main difference between the styles is that functional programming languages remove or at least deemphasize the imperative elements of procedural programming. The feature set of functional languages is therefore designed to support writing programs as much as possible in terms of pure functions:

- Whereas procedural languages model execution of the program as a sequence of imperative commands that may implicitly alter shared state, functional programming languages model execution as the evaluation of complex expressions that only depend on each other in terms of arguments and return values. For this reason, functional programs can have a free order of code execution, and the languages may offer little control over the order in which various parts of the program are executed. (For example, the arguments to a procedure invocation in Scheme are evaluated in an unspecified order.)

- Functional programming languages support (and heavily use) first-class functions, anonymous functions and closures, although these concepts are being included in newer procedural languages.

- Functional programming languages tend to rely on tail call optimization and higher-order functions instead of imperative looping constructs.

Many functional languages, however, are in fact impurely functional and offer imperative/procedural constructs that allow the programmer to write programs in procedural style, or in a combination of both styles. It is common for input/output code in functional languages to be written in a procedural style.

There do exist a few esoteric functional languages (like Unlambda) that eschew structured programming precepts for the sake of being difficult to program in (and therefore challenging). These languages are the exception to the common ground between procedural and functional languages.
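The contrast between the two styles can be sketched in Python, which supports both. The function names below are invented for the example; the first version mutates a running total in a loop, the second expresses the same computation with higher-order functions and anonymous functions, and the third shows a closure.

```python
from functools import reduce


def sum_of_squares_imperative(values):
    """Procedural style: a loop that repeatedly updates local state."""
    total = 0
    for value in values:
        total += value * value
    return total


def sum_of_squares_functional(values):
    """Functional style: compose map and reduce over pure functions."""
    squares = map(lambda value: value * value, values)
    return reduce(lambda accumulated, square: accumulated + square, squares, 0)


def make_scaler(factor):
    """Return a closure that remembers the factor it was created with."""
    return lambda value: value * factor


triple = make_scaler(3)
print(sum_of_squares_imperative([1, 2, 3]))
print(sum_of_squares_functional([1, 2, 3]))
print(triple(5))
```

Both versions of the sum give the same answer; the functional one has no assignment statements that alter state after a name is bound.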

Object-oriented programming

The focus of procedural programming is to break down a programming task into a collection of variables, data structures, and subroutines, whereas in object-oriented programming it is to break down a programming task into objects that expose behavior (methods) and data (members or attributes) using interfaces. The most important distinction is that while procedural programming uses procedures to operate on data structures, object-oriented programming bundles the two together, so an "object", which is an instance of a class, operates on its "own" data structure.[2]

Nomenclature varies between the two, although they have similar semantics:

| Procedural | Object-oriented |

|---|---|

| procedure | method |

| record | object |

| module | class |

| procedure call | message |
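The correspondence in the table can be illustrated with a small Python sketch. The bank-account example, the `deposit` procedure and the `Account` class are hypothetical and exist only to put the two columns side by side.

```python
# Procedural style: a record (here a plain dict) and a procedure that operates on it.
def deposit(account_record, amount):
    account_record["balance"] += amount
    return account_record["balance"]


# Object-oriented style: the object bundles the data with the method that uses it.
class Account:
    def __init__(self, balance=0):
        self.balance = balance

    def deposit(self, amount):
        self.balance += amount
        return self.balance


record = {"balance": 100}
procedural_balance = deposit(record, 50)      # procedure call on a record

account = Account(balance=100)
object_balance = account.deposit(50)          # method call ("message") on an object

print(procedural_balance, object_balance)
```

The computation is identical; what changes is whether the data is passed to the procedure or the operation is requested from the object that owns the data.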

Procedural programming

Procedural programming is a programming paradigm, derived from structured programming, based upon the concept of the procedure call. Procedures, also known as routines, subroutines, or functions (not to be confused with mathematical functions, but similar to those used in functional programming), simply contain a series of computational steps to be carried out. Any given procedure might be called at any point during a program's execution, including by other procedures or itself. The first major procedural programming languages appeared circa 1960, including Fortran, ALGOL, COBOL and BASIC. Pascal and C were published closer to the 1970s, while Ada was released in 1980. Go is an example of a more modern procedural language, first published in 2009.

Computer processors provide hardware support for procedural programming through a stack register and instructions for calling procedures and returning from them. Hardware support for other types of programming is possible, but no attempt was commercially successful (for example Lisp machines or Java processors).

OOP in a network protocol

The messages that flow between computers to request services in a client-server environment can be designed as the linearizations of objects defined by class objects known to both the client and the server. For example, a simple linearized object would consist of a length field, a code point identifying the class, and a data value. A more complex example would be a command consisting of the length and code point of the command and values consisting of linearized objects representing the command's parameters. Each such command must be directed by the server to an object whose class (or superclass) recognizes the command and is able to provide the requested service. Clients and servers are best modeled as complex object-oriented structures. Distributed Data Management Architecture (DDM) took this approach and used class objects to define objects at four levels of a formal hierarchy:

- Fields defining the data values that form messages, such as their length, codepoint and data values.

- Objects and collections of objects similar to what would be found in a Smalltalk program for messages and parameters.

- Managers similar to AS/400 objects, such as a directory to files and files consisting of metadata and records. Managers conceptually provide memory and processing resources for their contained objects.

- A client or server consisting of all the managers necessary to implement a full processing environment, supporting such aspects as directory services, security and concurrency control.

The initial version of DDM defined distributed file services. It was later extended to be the foundation of Distributed Relational Database Architecture (DRDA).
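The idea of a linearized object made of a length field, a code point and a data value can be sketched with Python's `struct` module. This is not the actual DDM wire format: the two-byte big-endian field widths, the code point value 0x1234 and the function names are assumptions chosen for illustration.

```python
import struct

# Assumed header layout: 2-byte total length, then 2-byte code point, big-endian.
HEADER = struct.Struct(">HH")


def linearize(code_point, data):
    """Serialize one object as length + code point + raw data bytes."""
    total_length = HEADER.size + len(data)
    return HEADER.pack(total_length, code_point) + data


def delinearize(message):
    """Recover the code point and data value from a linearized object."""
    total_length, code_point = HEADER.unpack_from(message)
    return code_point, message[HEADER.size:total_length]


HYPOTHETICAL_FILE_NAME = 0x1234  # made-up code point identifying the class

wire_bytes = linearize(HYPOTHETICAL_FILE_NAME, b"report.txt")
code_point, value = delinearize(wire_bytes)
print(len(wire_bytes), hex(code_point), value)
```

A receiver would use the code point to look up the class that knows how to interpret the data, which is how a command can be routed to an object able to service it.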

Polymorphism

Subtyping, a form of polymorphism, is when calling code can be agnostic as to whether an object belongs to a parent class or one of its descendants. For example, a function might call "make_full_name()" on an object, which will work whether the object is of class Person or class Employee. This is another type of abstraction which simplifies code external to the class hierarchy and enables strong separation of concerns.

Composition, inheritance, and delegation

Objects can contain other objects in their instance variables; this is known as object composition. For example, an object in the Employee class might contain (point to) an object in the Address class, in addition to its own instance variables like "first_name" and "position". Object composition is used to represent "has-a" relationships: every employee has an address, so every Employee object has a place to store an Address object.

Languages that support classes almost always support inheritance. This allows classes to be arranged in a hierarchy that represents "is-a-type-of" relationships. For example, class Employee might inherit from class Person. All the data and methods available to the parent class also appear in the child class with the same names. For example, class Person might define variables "first_name" and "last_name" with method "make_full_name()". These will also be available in class Employee, which might add the variables "position" and "salary". This technique allows easy re-use of the same procedures and data definitions, in addition to potentially mirroring real-world relationships in an intuitive way. Rather than utilizing database tables and programming subroutines, the developer utilizes objects the user may be more familiar with: objects from their application domain.
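The Person and Employee example above might look like this in Python. The class and member names follow the text; the `greeting` function and the sample values are added for illustration.

```python
class Person:
    def __init__(self, first_name, last_name):
        self.first_name = first_name
        self.last_name = last_name

    def make_full_name(self):
        return f"{self.first_name} {self.last_name}"


class Employee(Person):
    """An Employee is a type of Person with two extra variables."""

    def __init__(self, first_name, last_name, position, salary):
        super().__init__(first_name, last_name)
        self.position = position
        self.salary = salary


def greeting(person):
    # The caller does not need to know whether it was given a Person or an Employee.
    return "Hello, " + person.make_full_name()


people = [Person("Ada", "Lovelace"), Employee("Mary", "Smith", "Engineer", 50000)]
greetings = [greeting(person) for person in people]
print(greetings)
```

Employee defines no `make_full_name()` of its own; the method is inherited, and `greeting` works on either class without checking the type.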

Subclasses can override the methods defined by superclasses. Multiple inheritance is allowed in some languages, though this can make resolving overrides complicated. Some languages have special support for mixins, though in any language with multiple inheritance, a mixin is simply a class that does not represent an is-a-type-of relationship. Mixins are typically used to add the same methods to multiple classes. For example, class UnicodeConversionMixin might provide a method unicode_to_ascii() when included in class FileReader and class WebPageScraper, which don't share a common parent.
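A mixin of this kind can be sketched in Python as follows. The class and method names come from the text, but their bodies are assumptions: in particular, this `unicode_to_ascii()` simply drops characters with no ASCII equivalent, and the `read_ascii` and `page_ascii` methods are hypothetical.

```python
class UnicodeConversionMixin:
    """Adds a conversion helper; it does not model an is-a-type-of relationship."""

    def unicode_to_ascii(self, text):
        # Assumption: non-ASCII characters are dropped rather than transliterated.
        return text.encode("ascii", errors="ignore").decode("ascii")


class FileReader(UnicodeConversionMixin):
    def __init__(self, contents):
        self.contents = contents

    def read_ascii(self):
        return self.unicode_to_ascii(self.contents)


class WebPageScraper(UnicodeConversionMixin):
    def __init__(self, html):
        self.html = html

    def page_ascii(self):
        return self.unicode_to_ascii(self.html)


reader = FileReader("caf\u00e9 menu")
scraper = WebPageScraper("<p>na\u00efve</p>")
print(reader.read_ascii(), scraper.page_ascii())
```

The two classes share the helper without sharing any other ancestor, which is the situation the mixin is meant for.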

Abstract classes cannot be instantiated into objects; they exist only for the purpose of inheritance into other "concrete" classes which can be instantiated. In Java, the final keyword can be used to prevent a class from being subclassed.

The doctrine of composition over inheritance advocates implementing has-a relationships using composition instead of inheritance. For example, instead of inheriting from class Person, class Employee could give each Employee object an internal Person object, which it then has the opportunity to hide from external code even if class Person has many public attributes or methods. Some languages, like Go, do not support inheritance at all.
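As a sketch of that alternative in Python, Employee below holds a Person instead of inheriting from it. The `home_phone` attribute and the leading-underscore naming are illustrative assumptions; Python hides the inner object by convention only, not by enforcement.

```python
class Person:
    def __init__(self, first_name, last_name, home_phone):
        self.first_name = first_name
        self.last_name = last_name
        self.home_phone = home_phone  # public on Person

    def make_full_name(self):
        return f"{self.first_name} {self.last_name}"


class Employee:
    """Has a Person rather than being a Person."""

    def __init__(self, person, position):
        self._person = person      # internal object, hidden by convention
        self.position = position

    def make_full_name(self):
        # Expose only what external code should see by forwarding explicitly.
        return self._person.make_full_name()


employee = Employee(Person("Mary", "Smith", "555-0100"), "Engineer")
print(employee.make_full_name())
```

External code can still ask an employee for a full name, but attributes such as `home_phone` are not part of the Employee interface.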

The "open/closed principle" advocates that classes and functions "should be open for extension, but closed for modification".

Delegation is another language feature that can be used as an alternative to inheritance.

Objects and classes

Languages that support object-oriented programming typically use inheritance for code reuse and extensibility in the form of either classes or prototypes. Those that use classes support two main concepts:

- Classes – the definitions for the data format and available procedures for a given type or class of object; may also contain data and procedures (known as class methods) themselves, i.e. classes contain the data members and member functions

- Objects – instances of classes

Objects sometimes correspond to things found in the real world. For example, a graphics program may have objects such as "circle", "square", "menu". An online shopping system might have objects such as "shopping cart", "customer", and "product".[7] Sometimes objects represent more abstract entities, like an object that represents an open file, or an object that provides the service of translating measurements from U.S. customary to metric.

Each object is said to be an instance of a particular class (for example, an object with its name field set to "Mary" might be an instance of class Employee). Procedures in object-oriented programming are known as methods; variables are also known as fields, members, attributes, or properties. This leads to the following terms:

- Class variables – belong to the class as a whole; there is only one copy of each one

- Instance variables or attributes – data that belongs to individual objects; every object has its own copy of each one

- Member variables – refers to both the class and instance variables that are defined by a particular class

- Class methods – belong to the class as a whole and have access only to class variables and inputs from the procedure call

- Instance methods – belong to individual objects, and have access to instance variables for the specific object they are called on, inputs, and class variables
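These terms can be illustrated with a short Python class. The `headcount` counter and the method names `total` and `badge` are invented for the example.

```python
class Employee:
    headcount = 0  # class variable: one copy shared by the whole class

    def __init__(self, name):
        self.name = name           # instance variable: one copy per object
        Employee.headcount += 1

    @classmethod
    def total(cls):
        """Class method: sees class variables, but no particular instance."""
        return cls.headcount

    def badge(self):
        """Instance method: sees this object's variables and class variables."""
        return f"{self.name} (1 of {Employee.headcount})"


mary = Employee("Mary")
tom = Employee("Tom")
print(Employee.total())
print(mary.badge())
```

Each object keeps its own `name`, while both objects observe the same `headcount`.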

Objects are accessed somewhat like variables with complex internal structure, and in many languages are effectively pointers, serving as actual references to a single instance of said object in memory within a heap or stack. They provide a layer of abstraction which can be used to separate internal from external code. External code can use an object by calling a specific instance method with a certain set of input parameters, read an instance variable, or write to an instance variable. Objects are created by calling a special type of method in the class known as a constructor. A program may create many instances of the same class as it runs, which operate independently. This is an easy way for the same procedures to be used on different sets of data.

Object-oriented programming that uses classes is sometimes called class-based programming, while prototype-based programming does not typically use classes. As a result, a significantly different yet analogous terminology is used to define the concepts of object and instance.

In some languages classes and objects can be composed using other concepts like traits and mixins.

Object-oriented programming (OOP)

Object-oriented programming (OOP) is a programming paradigm based on the concept of "objects", which may contain data, in the form of fields, often known as attributes; and code, in the form of procedures, often known as methods. A feature of objects is that an object's procedures can access and often modify the data fields of the object with which they are associated (objects have a notion of "this" or "self"). In OOP, computer programs are designed by making them out of objects that interact with one another. There is significant diversity of OOP languages, but the most popular ones are class-based, meaning that objects are instances of classes, which typically also determine their type.

Many of the most widely used programming languages (such as C++, Delphi, Java, Python etc.) are multi-paradigm programming languages that support object-oriented programming to a greater or lesser degree, typically in combination with imperative, procedural programming. Significant object-oriented languages include Java, C++, C#, Python, PHP, Ruby, Perl, Object Pascal, Objective-C, Dart, Swift, Scala, Common Lisp, and Smalltalk.

Parallel array

A group of parallel arrays (also known as structure of arrays or SoA) is a form of implicit data structure that uses multiple arrays to represent a singular array of records. It keeps a separate, homogeneous data array for each field of the record, each having the same number of elements. Then, objects located at the same index in each array are implicitly the fields of a single record. Pointers from one object to another are replaced by array indices. This contrasts with the normal approach of storing all fields of each record together in memory (also known as array of structures or AoS). For example, one might declare an array of 100 names, each a string, and 100 ages, each an integer, associating each name with the age that has the same index.

An example in C using parallel arrays:

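    #include <stdio.h>

    int main(void) {
        /* Index 0 is a sentinel "None" record, so real records start at 1. */
        int ages[] = {0, 17, 2, 52, 25};
        const char *names[] = {"None", "Mike", "Billy", "Tom", "Stan"};
        int parent[] = {0 /*None*/, 3 /*Tom*/, 1 /*Mike*/, 0 /*None*/, 3 /*Tom*/};

        for (int i = 1; i <= 4; i++) {
            /* parent[i] is an index into names, replacing a pointer. */
            printf("Name: %s, Age: %d, Parent: %s \n",
                   names[i], ages[i], names[parent[i]]);
        }
        return 0;
    }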

In Perl (using a hash of arrays to hold references to each array):

    my %data = (
        first_name   => ['Joe', 'Bob', 'Frank', 'Hans'],
        last_name    => ['Smith', 'Seger', 'Sinatra', 'Schultze'],
        height_in_cm => [169, 158, 201, 199],
    );

    # Walk every index of the first array; all arrays have the same length.
    for my $i (0 .. $#{ $data{first_name} }) {
        printf "Name: %s %s\n", $data{first_name}[$i], $data{last_name}[$i];
        printf "Height in CM: %i\n", $data{height_in_cm}[$i];
    }

Or, in Python:

    first_names = ['Joe', 'Bob', 'Frank', 'Hans']
    last_names = ['Smith', 'Seger', 'Sinatra', 'Schultze']
    heights_in_cm = [169, 158, 201, 199]

    # Index-based traversal of the parallel lists
    for i in range(len(first_names)):
        print("Name: %s %s" % (first_names[i], last_names[i]))
        print("Height in CM: %s" % heights_in_cm[i])

    # Using zip:
    for first_name, last_name, height in zip(first_names, last_names, heights_in_cm):
        print("Name: %s %s" % (first_name, last_name))
        print("Height in CM: %s" % height)
